How to Create Your First Hyper-Localization Experiment

Why your best international growth ideas should fail - and how to learn from them

International growth teams must become truly world-class at designing and running experiments. They serve to unearth game-changing ideas that can unlock exponential user and revenue growth, generate compelling data for business cases and help build playbooks for scaling growth efforts to new markets.

Experimentation is no substitute for good judgment. Sub-par experiences, user journey gaps and US-centric designs do not require experiments - they simply require fixing.

When approaching the work of international growth, there are 3 broad categories to consider:

Removing friction / unblocking flows for international users (lead generation flows, sign up experiences, holistic end-to-end localization)

Adapting experiences to meet local user requirements (payments, currency, integrations, functionality)

Experimentation to identify key growth levers (across acquisition, adoption, conversion, retention)

Why Experiment?

For situations where your ideas need data-backed validation, experimentation is the best approach.

You may believe that tailoring a particular experience for local requirements will increase engagement, but real-world data will prove (or disprove) it in practice. You might wish to reduce prices to drive paid conversion rates in certain markets, but a focused experiment will reveal the true price elasticity of your product and avoid any costly mistakes.

Experiments provide a bridge between ideas and new experiences.

The Exception: Strategic Buy-in Experiments

In certain situations, experiments can be valuable in building supportive data and rationale to request investment into international expansion and growth. In this scenario, running a tightly-scoped experiment to “prove” the value of international growth or localization can be highly impactful.

In these cases, the outcome is frequently a foregone conclusion (invest in localization and growth will follow), but the real purpose is to deliver incontrovertible evidence to senior leadership.

Embrace Failure!

Experiments work best when you are unsure of the outcome. If your team is running experiments with a 80% success rate, it means one of two things:

You are wasting time experimenting instead of simply improving experiences

You are pursuing conservative, low-risk experiments instead of seeking more ambitious ideas

Low-risk experiments are usually not worth the effort. They are typically characterised by low upside potential and can reinforce a conservative mindset among the team.

While everyone hopes for a successful outcome, failed experiments often yield the biggest insights. Finding out what doesn’t work in a market is priceless. Often, a failed experiment will deliver counter-intuitive results that will inform future improvements and experiments. Here are two examples of failed experiments I’ve run, yet derived critical insights from:

Removing too-much friction from a signup flow degrades the quality of users and results in lower product adoption rates

Lowering subscription prices failed to increase paid conversion rates and simply lowered total market revenue

Failed experiments should be used to shape and hone strategy. Often, a failed experiment will be re-run with slightly different parameters or a different target audience. What fails in one market can be repackaged and run as a brand new experiment in another.

Where to Start

Hyper-localization presents the ideal category for running international experiments. Hyper-localization refers to tailoring the user experience to align with the local requirements and context of a target audience.

Consider the difference between localizing a marketing page for accounting software into French and tailoring the same page for a French-speaking financial director, living in Quebec.

For companies starting out on their experimental journey, the initial goal is not to succeed at all costs. Building the muscle to run experiments is a worthy and compelling target.

Let’s walk through an example of a hyper-localized experiment from start to finish.

1. Gather Insights

The best experiment ideas come from observation and interaction with customers. The intersection of user behaviour, experience friction and desired goal will yield a raft of insights. Start systematically gathering these insights across a range of qualitative and quantitative sources:

Customer feedback: look for feedback trends that persist in select markets

Customer interviews: uncover real-world context, requirements and preferences by speaking directly to customers in-market

Market research: what social media platforms dominate in the market, how do customers learn about products, what are their buying styles and support needs?

Experience audits: run end-to-end website and marketing audits in-language and in-market to find experience gaps and pitfalls

Seasonal data: what do you notice about seasonal signup, usage and buying trends?

Drop-off points: identify the drop-off points in user journeys for each market

Conversion & renewal data: pay attention to paid conversion and renewal data, as they will reveal monetization improvement opportunities

It’s imperative to develop a keen market sense that fosters a highly opinionated point of view for what experience improvements are required to elevate the experience in your priority markets. Once this is established, you have the framework to make informed predictions for your experiments.

2. Develop Hypothesis

Through brainstorming, ideation or other group exercises, create a collection of discrete experiment hypotheses. These do not need to be elaborately constructed, but should be easily understood and allow for evaluation and consideration.

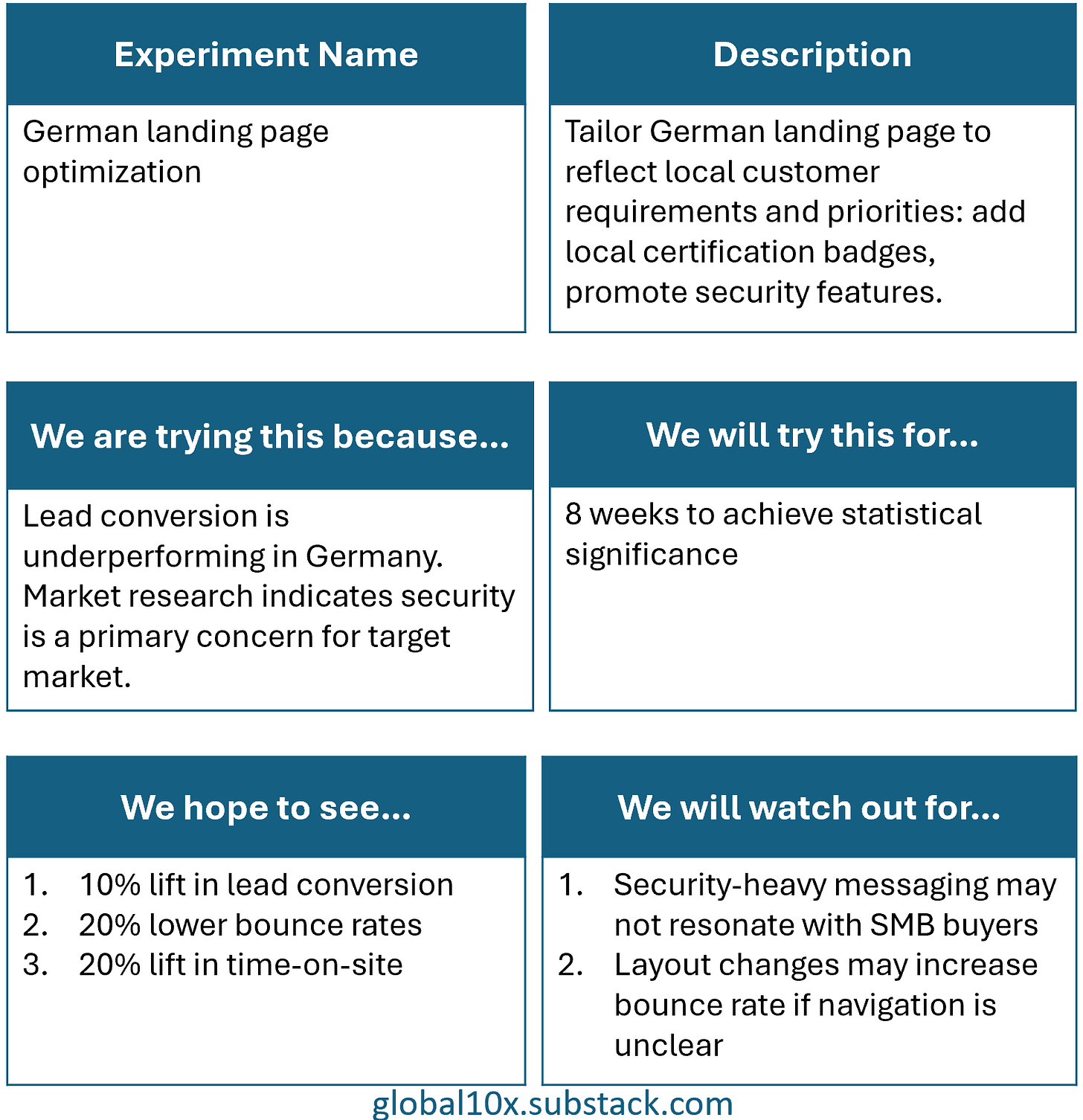

Use a consistent template to describe the experiments. At this stage, you don’t need extensive designs or cohort analysis; it’s sufficient to explain the what and why of each experiment.

The following experiment template is a simple way to document your hypothesis:

Here’s how the template can be used in practice, with a real-world example of a hyper-localization experiment:

3. Rank Experiments

Given the finite constraints of an international team, it’s important to select experiments that are (1) high-potential, and (2) feasible to build. A consistent ranking methodology will provide the basis for an experiment backlog.

While each individual experiment should be evaluated on its own merits, consider the broader set of goals and focus areas:

What are your priority markets?

Who are your target customers in those markets?

What areas of focus will you prioritize - for example:

Marketing: awareness/acquisition/lead generation

Product: adoption/conversion/renewal/satisfaction

What resources are available to you to execute experiments?

It’s better to begin your experiment journey with a narrow focus and exceptionally clear measures of success. Aim too broad and the results will be diffuse or too vague to draw conclusions from.

For each experiment hypothesis, a simple ranking approach works well, such as the RICE method:

Reach: how much of your target audience will this impact? Define this according to your focus (revenue / users / etc.)

Impact: what is the quantitative improvement expected? Create a consistent weighting system to reflect relative impact.

Confidence: how confident are you in the hypothesis? Don’t over-engineer this: aim for high (100%), medium (80%) or low (50%).

Effort: how much work is required to create and execute the experiment? This is typically evaluated based on number of person-months required.

To calculate the score, multiply the reach x impact x confidence and divide by effort.

Let’s consider how to apply the RICE method for our German landing page experiment:

Reach (500 monthly visitors) × Impact (Medium = 0.8) × Confidence (High = 100%) / Effort (0.5 person-months) = Score of 800

With a consistent scoring model, you can evaluate your hypotheses for their relative benefits and create an experimental roadmap to follow.

4. Build Experiment

Before building anything, define what success looks like and what risks you’re willing to accept.

Set Your Success Criteria

Be explicit about your targets. If you aim for 20% improvement in a metric but achieve only 5%, will you still ship the change to production? If the experiment brings short-term improvement (lead conversion grows) but longer-term degradation (customer churn grows), how will you deal with this?

Your experiment template should already outline your desired outcomes with quantified targets. Now decide your threshold for success. Will you ship changes that hit 50% of your target? 75%? This prevents post-hoc rationalization and keeps your team honest about results.

Establish Guardrails

While your experiment aims for a positive outcome, it’s critical to define the negative outcomes that would trigger an immediate halt. At what stage will you stop an experiment if it causes material damage to user signups, revenue, or customer sentiment?

Guardrails should be specific and measurable. For example:

Halt if bounce rate increases by more than 15%

Halt if form abandonment exceeds 40%

Halt if customer complaint volume doubles

Having clear guardrails avoids any surprises or panic if there is a sub-optimal result.

Build the Experiment

Most experiments require a hybrid team of specialists to build. Depending on the level of sophistication and complexity, this may include copywriters, engineers, designers, data scientists, and product managers.

It’s not always possible to have a dedicated team available to create experiments. In such cases, consider experiments that are scoped to limited areas of functionality or experience. Your CMS platform may have built-in A/B testing capabilities, allowing you to test alternative call-to-action buttons with only transcreation support. Your website may facilitate dedicated landing page creation without engineering resources, enabling fast iteration.

The key is to start; even small-scale experiments will build your team’s experimentation muscle.

With your experiment built and guardrails established, it’s time to launch and monitor results.

5. Execute & Analyze

Traditionally, experiments are run against a target cohort, with a control group for comparison. For hyper-localization experiments, this isn’t always practicable. The throughput of traffic may not warrant sub-dividing the audience into experiment vs. control. Also, the complexity of creating, monitoring and analyzing both groups can be substantial - without dedicated data scientist help, it’s often a non-starter.

For international growth purposes, you may make a decision to take a more pragmatic “do no harm” approach to your experiments.

Given the more limited audience canvas, resource capabilities and time frame, a “do no harm” approach suggests that any and all net-positive experiments will be considered successful. This explicitly lowers the threshold for success by removing the requirement for true statistical significance (which could take months for some international markets with low-medium traffic).

Assuming an experiment timeframe of 8 weeks: compare your treatment metrics to the pre-experiment baseline. Look for: (1) directional movement toward your targets, (2) absence of negative guardrail triggers, (3) consistency across the measurement period.

Since your experiments are based on an opinionated assessment of market experience improvements, your focus shifts to understanding which changes deliver the most value. Should you focus entirely on landing page optimization or local content creation? Should you increase local payment method support for key markets or adapt pricing? Targeted experiments will answer these questions for each market.

Conclusion

Building an experimentation practice for international growth takes time and discipline. Your first experiment won’t be perfect, and that’s precisely the point. The goal is to build the muscle: to develop the instinct for what questions to ask, what metrics matter, and what insights to extract from the data.

Start with a single hyper-localization experiment in your highest-potential market. Use the template, follow the process, and embrace whatever outcome emerges. Failed experiments often teach more than successful ones.

Over time, experimentation will become the foundation for how your team approaches international growth, moving from gut feel and assumption to data-backed decisions that compound into sustainable, scalable growth.